Prompt-2-Paper: Automatic Scientific Testing via LLM-run experiments.

Leveraging Large Language Models to Build and Execute Computational Workflows

September 2023. Published in the WORKS23 workshop at the International Conference for High Performance Computing (SC23).

An ecosystem of LLM agents. A key insight is supervising their process, i.e., following the scientific method of hypothesis, experimentation, and analysis, instead of supervising outcomes (i.e., supervised training data).

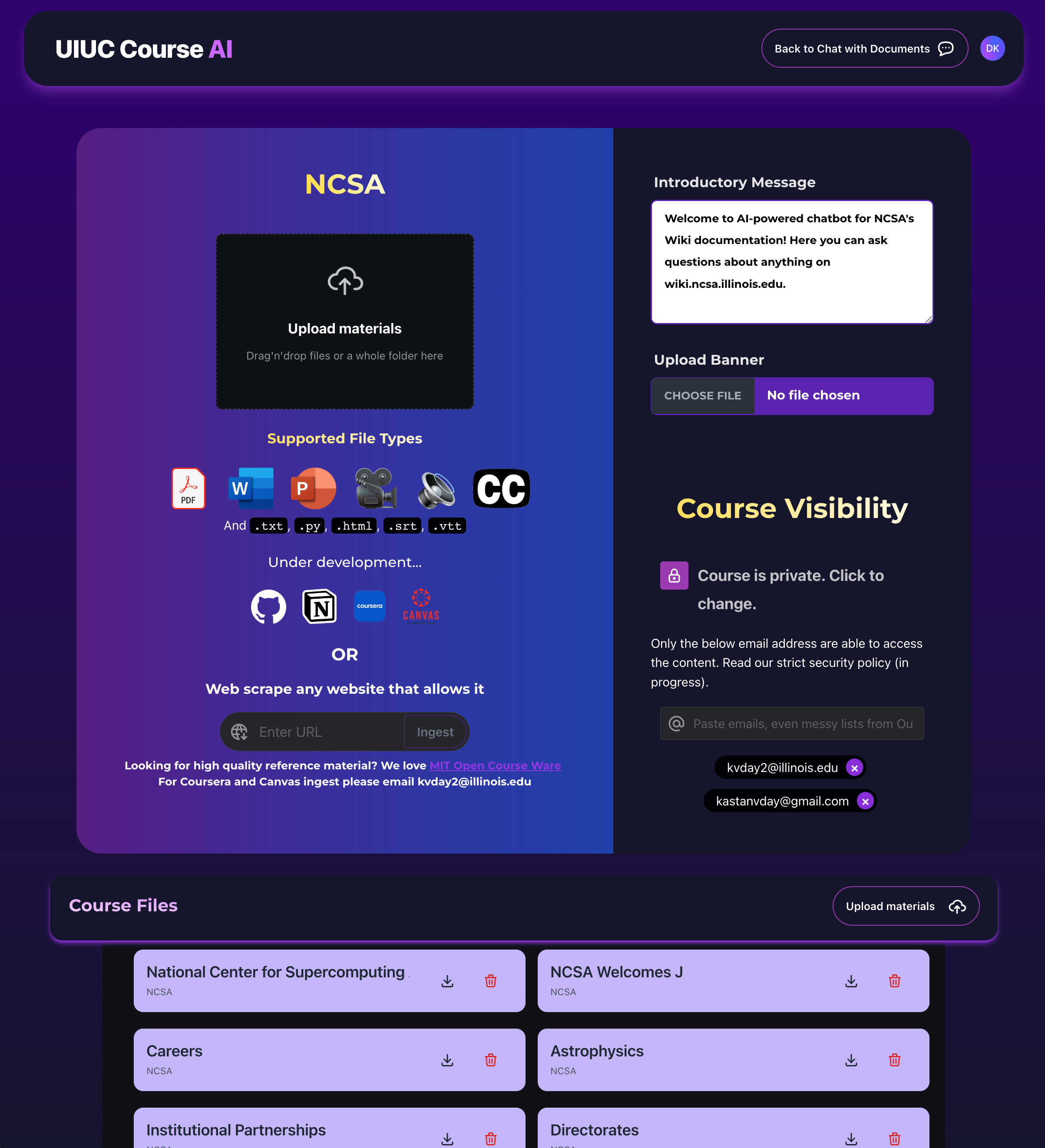

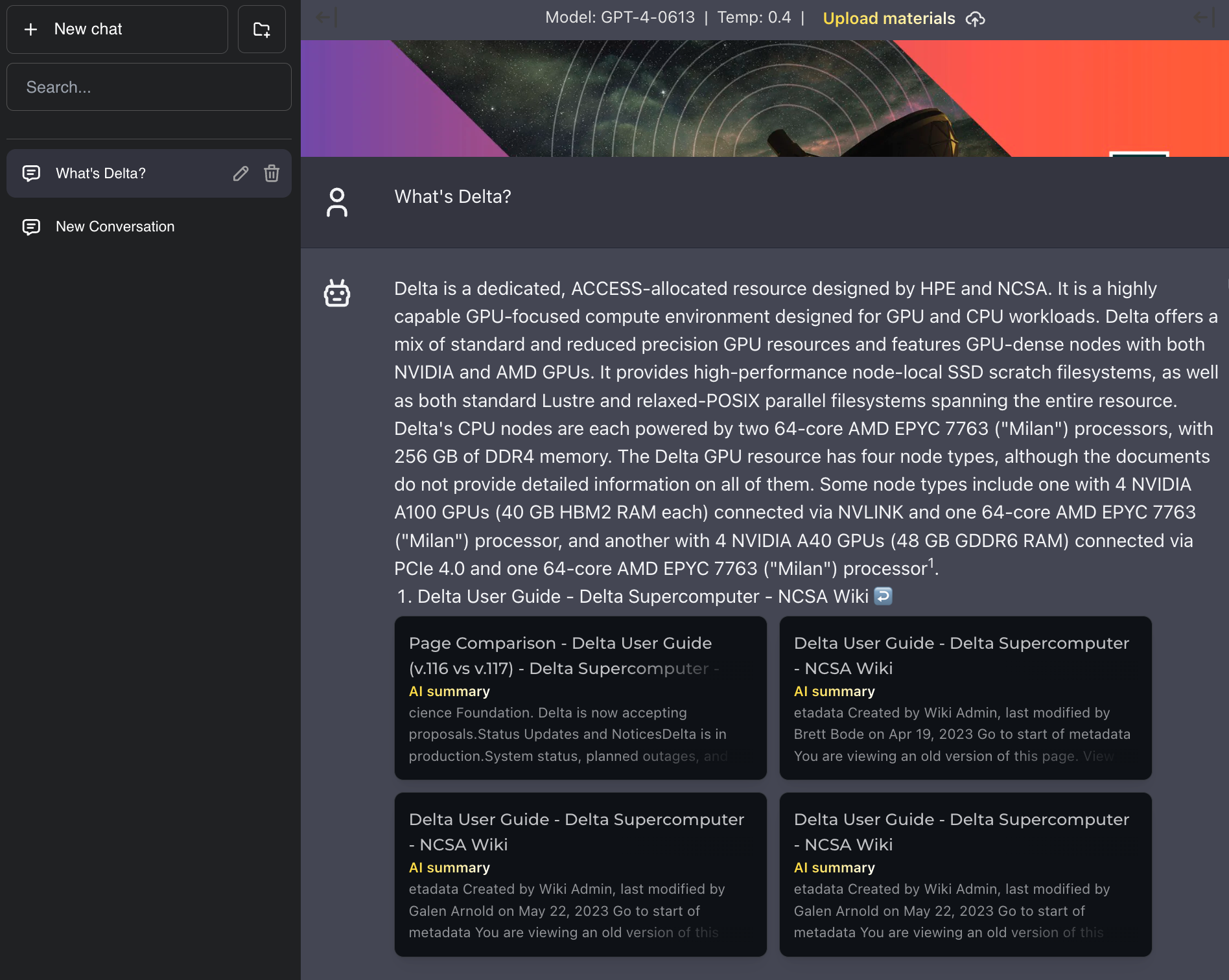

Lowest effort, highest factuality custom chatbot

uiuc.chat

knowledge extraction

May 2023 – Present

Upload anything, search everything, get answers. Factual QA against your knowledge base using prompt stuffing.

The backend and frontend code is all free & open source.

Try it for free, and share a link to your knowledge base with friends & colleagues. Upload any collection of documents, like your entire collection of research papers, and ask difficult questions that GPT-4 answers by synthesizing across them. And of course, every answer cites its sources from your own documents.
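The prompt-stuffing idea mentioned above can be illustrated with a minimal sketch. This is not the uiuc.chat implementation: the word-overlap scoring is a simple stand-in for a real retriever, and the function names, the `top_k` and `max_chars` parameters, and the prompt wording are all assumptions made for illustration. The sketch picks the most relevant documents, numbers them so the model can cite them, and places them in the prompt ahead of the question.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question, passage):
    """Count word occurrences shared by question and passage (toy retriever)."""
    q = Counter(tokenize(question))
    p = Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)


def build_stuffed_prompt(question, documents, top_k=2, max_chars=2000):
    """Return (prompt, sources) for a dict mapping source name -> text.

    The top_k best-scoring documents are numbered and "stuffed" into the
    prompt, stopping early if the hypothetical max_chars budget would be
    exceeded.
    """
    ranked = sorted(
        documents.items(),
        key=lambda item: score(question, item[1]),
        reverse=True,
    )
    context_lines, sources, used = [], [], 0
    for index, (name, text) in enumerate(ranked[:top_k], start=1):
        if used + len(text) > max_chars:
            break
        context_lines.append(f"[{index}] ({name}) {text}")
        sources.append(name)
        used += len(text)
    prompt = (
        "Answer using only the numbered sources below and cite them like [1].\n\n"
        + "\n".join(context_lines)
        + f"\n\nQuestion: {question}\nAnswer:"
    )
    return prompt, sources


# Hypothetical knowledge base for demonstration.
example_documents = {
    "paper_a.pdf": "Transformers use attention to mix tokens.",
    "paper_b.pdf": "GANs train a generator against a discriminator.",
    "notes.txt": "Sleep experiments measured heart rate.",
}
example_prompt, example_sources = build_stuffed_prompt(
    "How do transformers use attention?", example_documents, top_k=1
)
```

The returned prompt would then be sent to a chat model, and the `sources` list lets the interface show which documents backed the answer.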

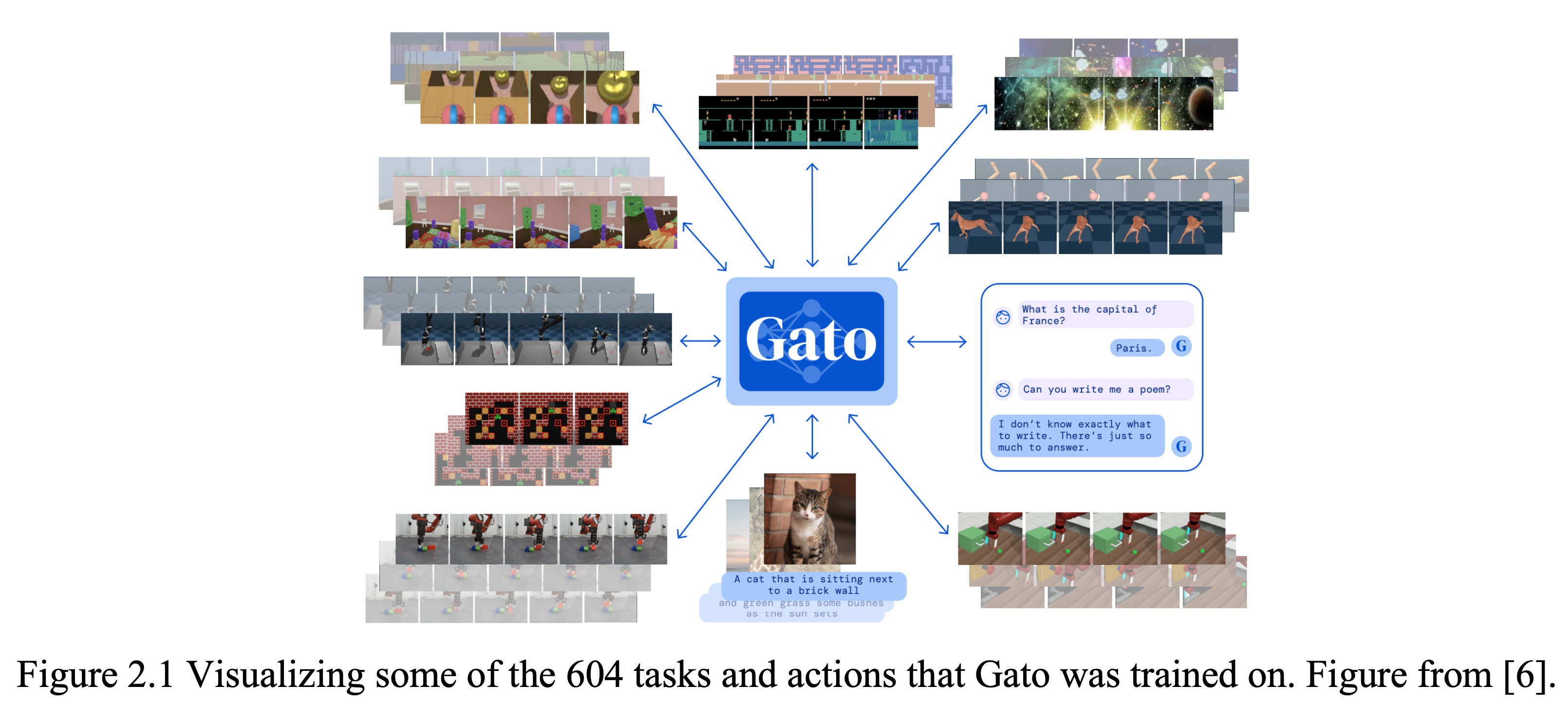

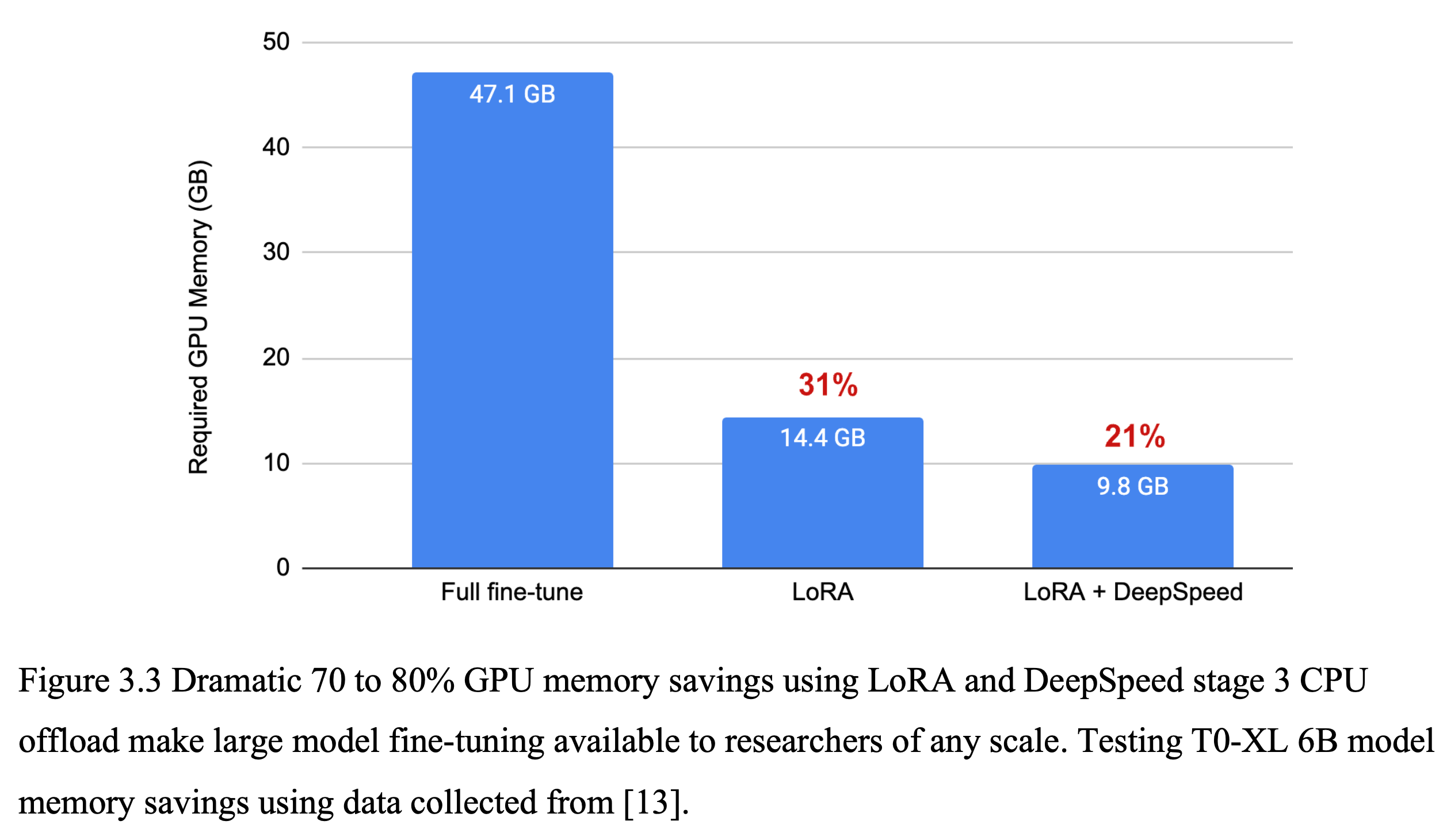

My Master’s Thesis

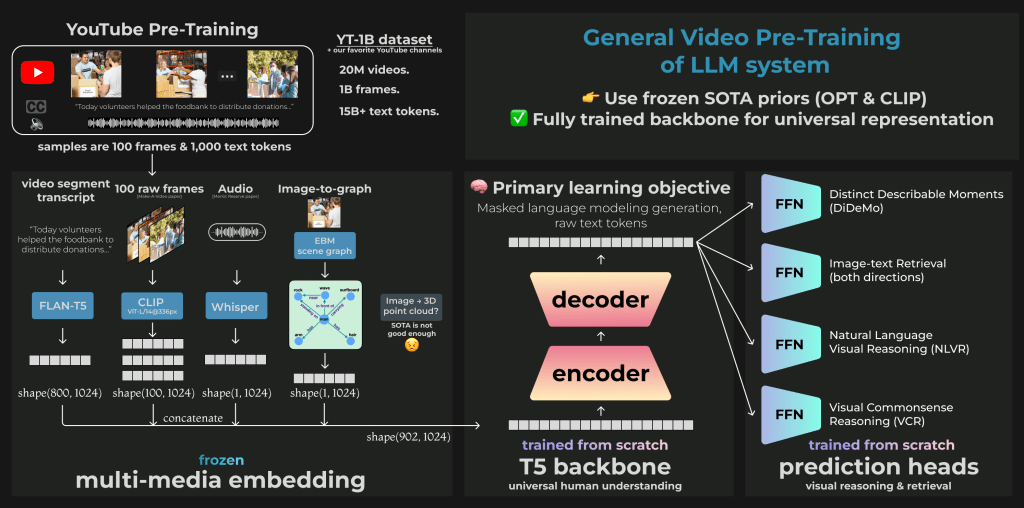

Training a Massively Multimodal Transformer on YouTube Data

May 2023

Selected Figures

My favorite ideas from grad school

- Review of Parameter Efficient Fine-Tuning (PEFT) methods

- Debunking the True Purpose of RLHF

- Multimodal Transformers & the three key architectures to combine any set of modalities:

  - Cross Attention (aka standard encoder-decoder attention)

  - Small MLP Mapping Projection

  - Joint Embeddings with Contrastive Learning

- Introducing VPT, a massive combination of pretrained LLMs using the above techniques.
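As a rough illustration of the "Small MLP Mapping Projection" idea from the list above, the sketch below maps a feature vector from one modality into the embedding size a language model expects, then prepends it to the text token embeddings. It is not the VPT code: the weights are random and untrained, and all names and dimensions (`IMAGE_DIM`, `HIDDEN_DIM`, `TOKEN_DIM`, `project_to_token_space`) are hypothetical and chosen only to keep the example small.

```python
import random


def make_linear(n_in, n_out, rng):
    """Create random weights and zero biases for a dense layer (untrained)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def linear(x, layer):
    """Apply y = W x + b using plain Python lists."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def project_to_token_space(feature, hidden_layer, output_layer):
    """Two-layer MLP: modality feature -> hidden -> token-embedding size."""
    return linear(relu(linear(feature, hidden_layer)), output_layer)


# Hypothetical sizes, far smaller than any real model.
IMAGE_DIM, HIDDEN_DIM, TOKEN_DIM = 8, 16, 4

rng = random.Random(0)
hidden_layer = make_linear(IMAGE_DIM, HIDDEN_DIM, rng)
output_layer = make_linear(HIDDEN_DIM, TOKEN_DIM, rng)

# Stand-ins for a frozen image encoder's output and a prompt's token embeddings.
image_feature = [rng.uniform(-1.0, 1.0) for _ in range(IMAGE_DIM)]
text_token_embeddings = [[0.0] * TOKEN_DIM for _ in range(3)]

# The projected feature acts as one extra "token" placed before the text.
visual_token = project_to_token_space(image_feature, hidden_layer, output_layer)
llm_input_sequence = [visual_token] + text_token_embeddings
```

In a setup like this, typically only the small projection is trained while the pretrained encoder and language model stay frozen, which is what keeps the approach cheap.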

Custom Transformer Architecture

Video Pretrained Transformer: A Multimodal Mixture of Pre-trained Experts

September – April 2023

Custom Architecture: 3D CNN + RNN for Spacetime Modeling

Winner of Argonne National Laboratory’s AI Hackathon

January 2022

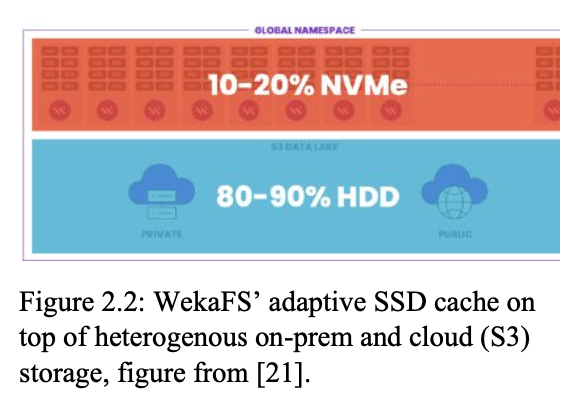

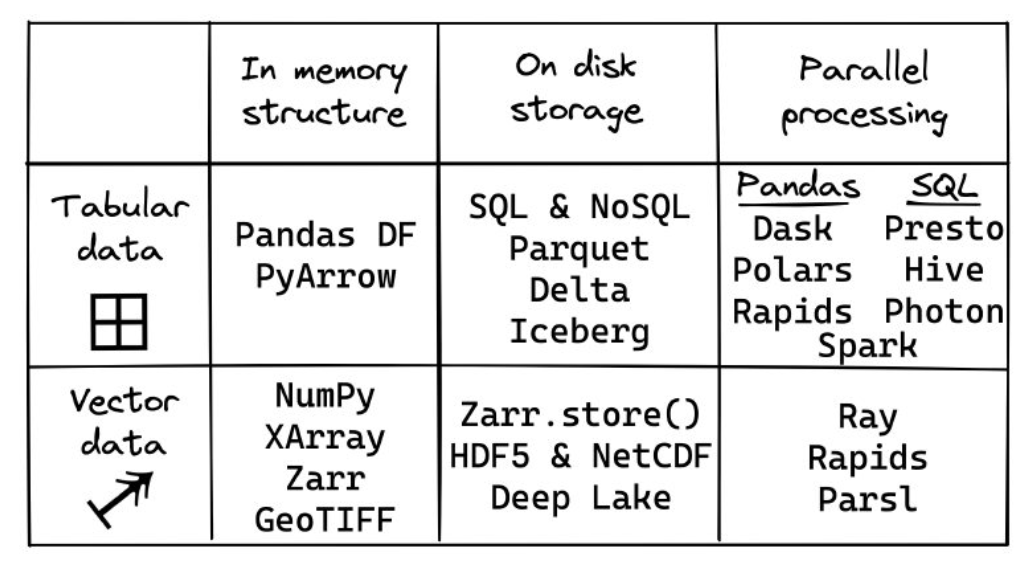

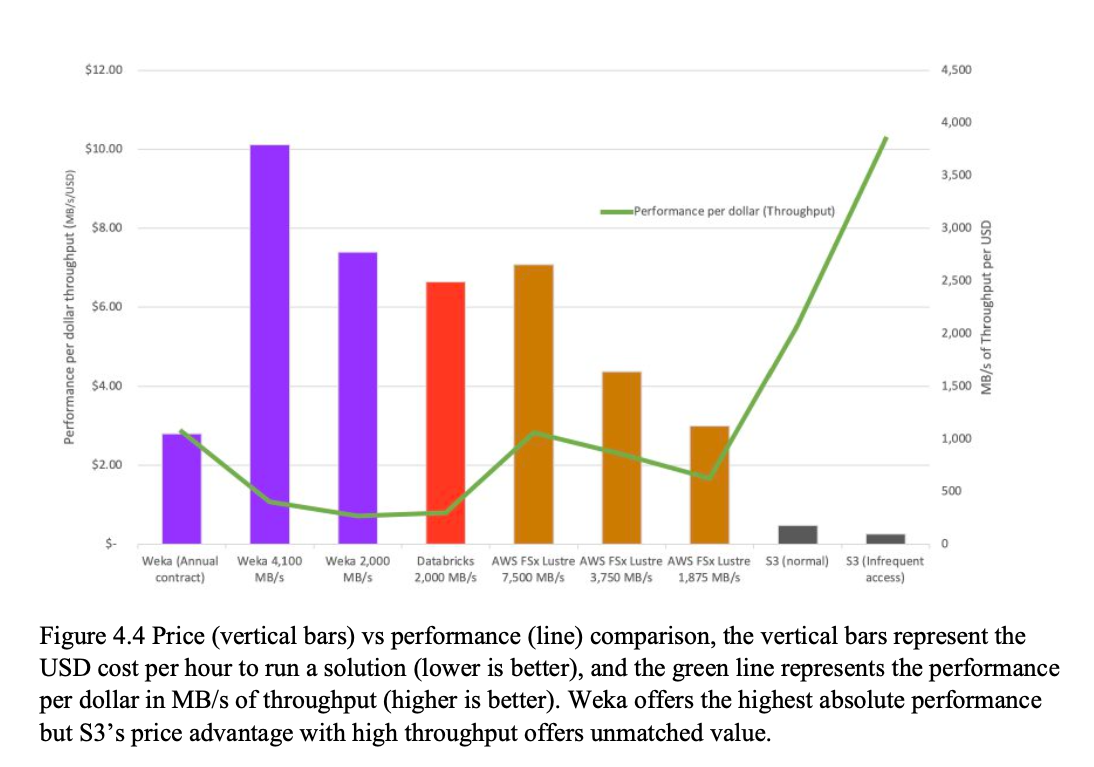

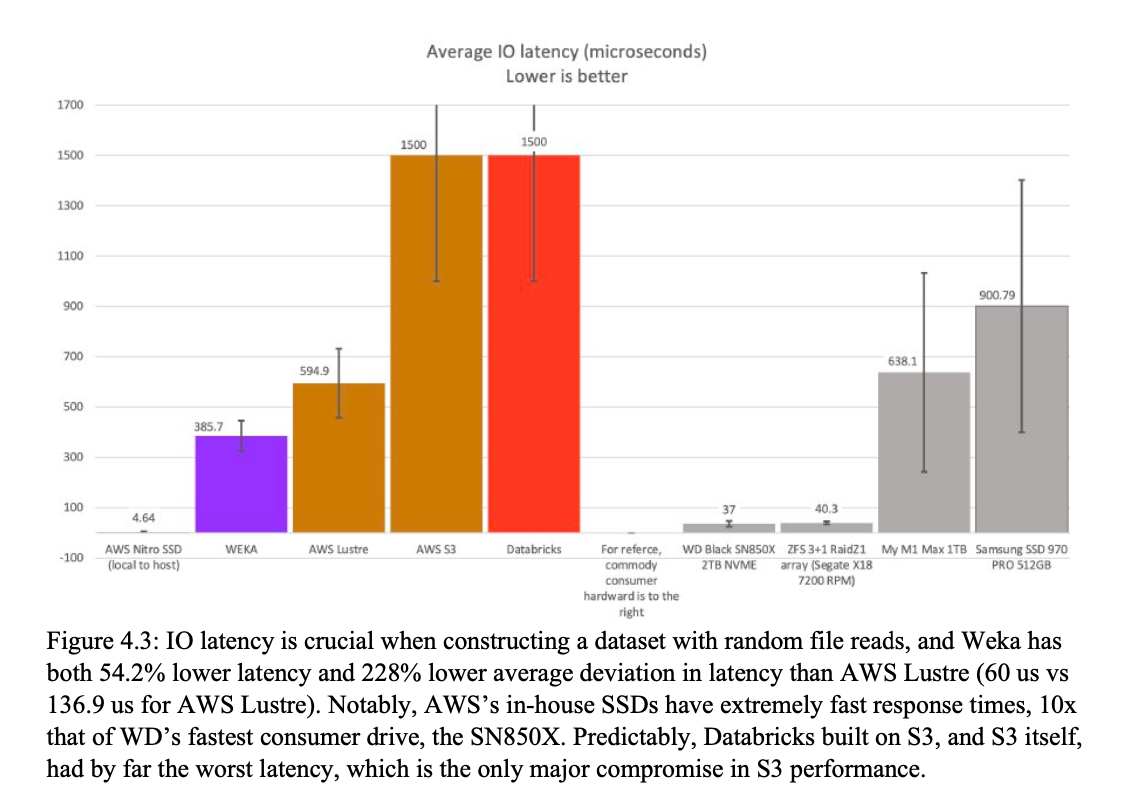

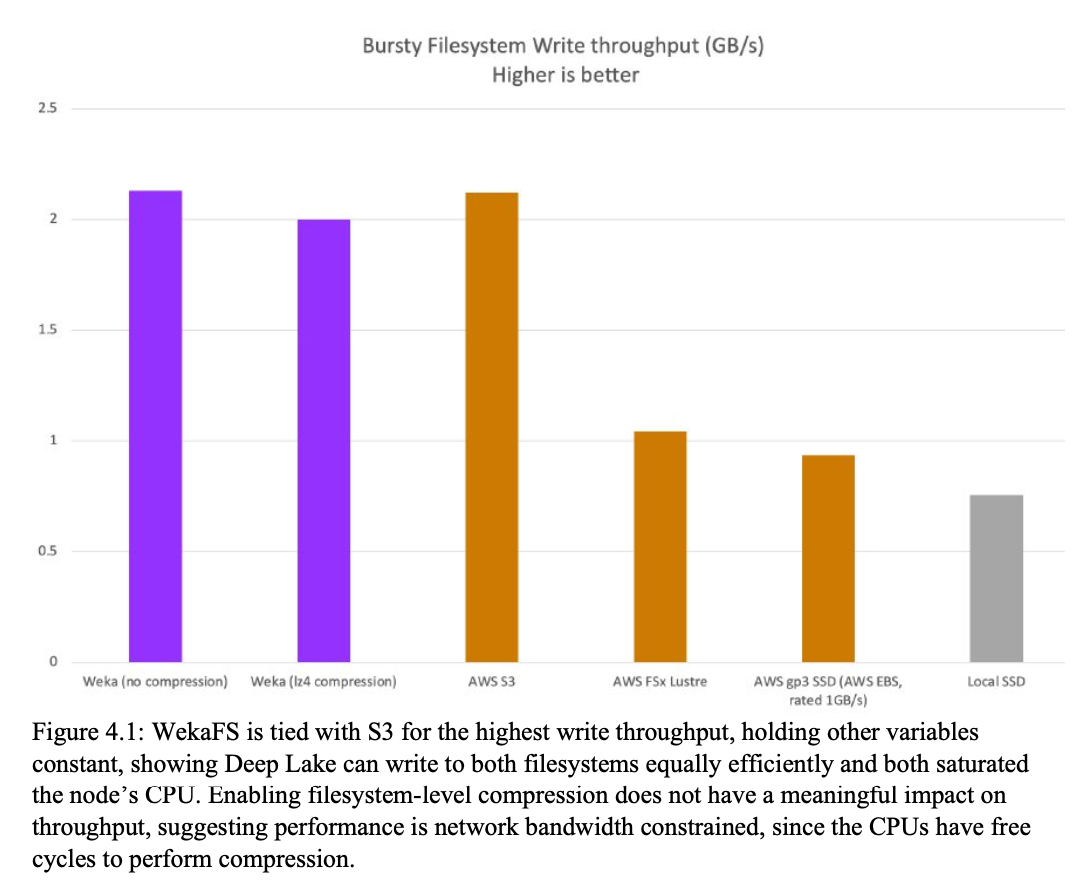

Cheapest, easiest cloud storage of multimodal datasets

Optimal Database and File system for Multimodal AI Training in Modern Multi-cloud Environments

May 2023

Selected Figures

Image Generation Before Diffusion

Unsupervised Fine-grained Image Generation using GANs

September 2017 (2nd year undergrad)

Several years before Stable Diffusion hit the mainstream, I worked on “AI Avatar generation” to create customizable self-portraits with editable features, much like a video game character creator lets you change hair, glasses, face, and so on.

AI Apple Watch Health Monitoring

Randomized Controlled Trials (RCTs) for N-of-1 Health Experiments

May 2020 (senior year undergrad)

This research became my first startup, Bioloop Sleep, which was acquired by BetterUp in 2023.

AI@NCSA Announcement
